I am a fifth-year Ph.D. student in the Computer Science department at Cornell University, advised by Wen Sun. Before Cornell, I received my bachelor's degree in Computer Science and Applied Mathematics from Brown University in 2018, where I worked with Stefanie Tellex and George Konidaris in the Humans to Robots (H2R) Laboratory.

CV / Email / Google Scholar / GitHub / LinkedIn

I am interested in machine learning, specifically imitation learning and reinforcement learning, and their intersection with generative models. I am particularly interested in how to leverage expert demonstrations and learned feature representations for scalable, efficient reinforcement learning. Recently, I have been investigating imitation learning and reinforcement learning for large language models (LLMs): improving sample efficiency, reducing reward hacking, and developing better RLHF algorithms.

Jonathan D. Chang*, Wenhao Zhan*, Kianté Brantley, Dipendra Misra, Jason D. Lee, Wen Sun Preprint, 2024 arXiv (upcoming) A new RLHF algorithm with guarantees that leverages dataset resets to strictly improve upon PPO at no additional computational cost.

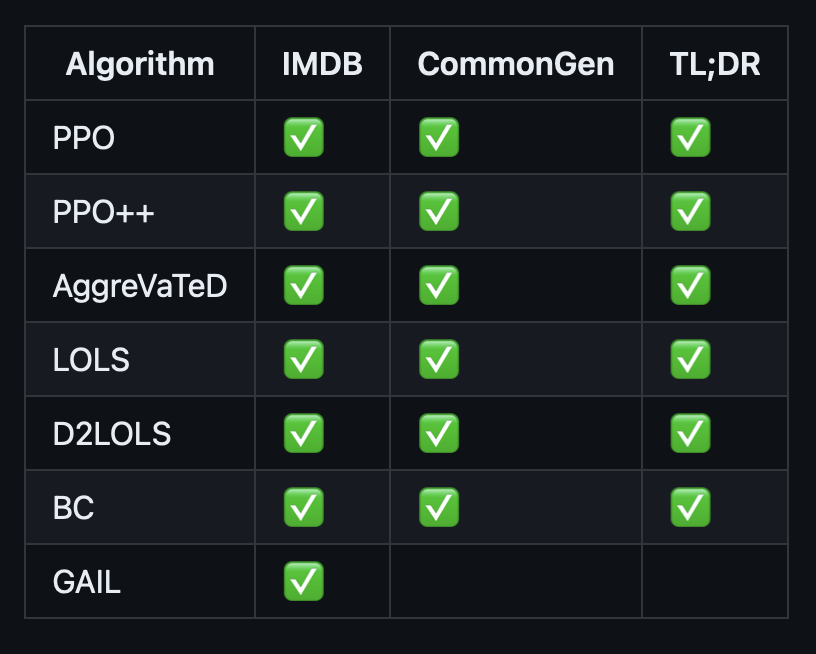

Jonathan D. Chang*, Kianté Brantley*, Rajkumar Ramamurthy, Dipendra Misra, Wen Sun Repository, 2024 GitHub A distributed research codebase for developing RL and IL algorithms with LLMs, with DeepSpeed, Transformers, PEFT, and FSDP integration.

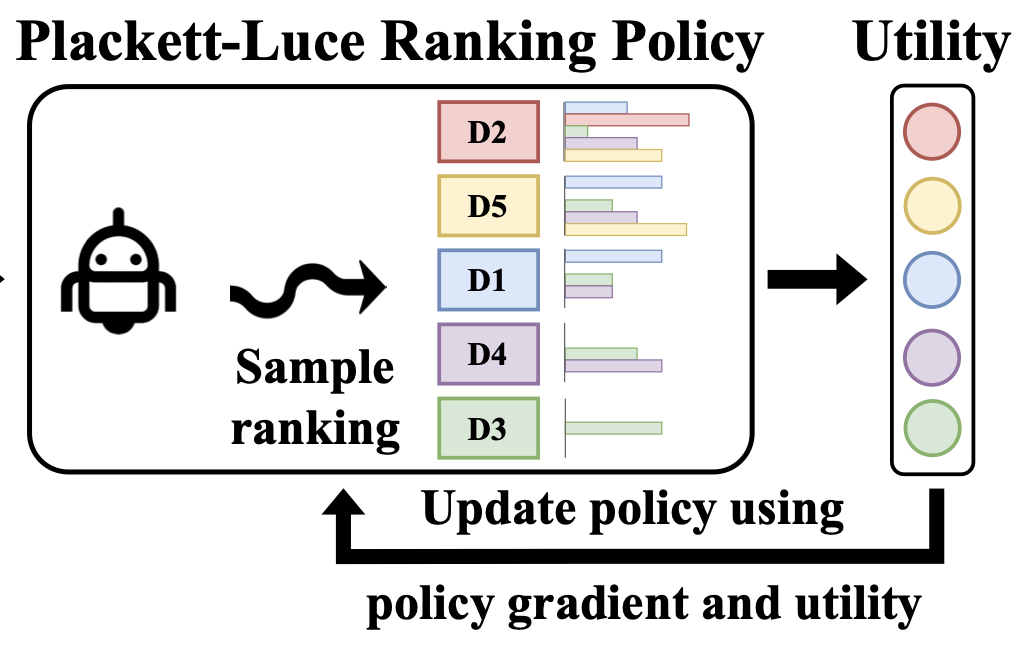

Ge Gao, Jonathan D. Chang*, Claire Cardie, Kianté Brantley*, Thorsten Joachims FMDM@NeurIPS, 2023 arXiv RL fine-tuning of retrieval models with a Plackett-Luce ranking policy.

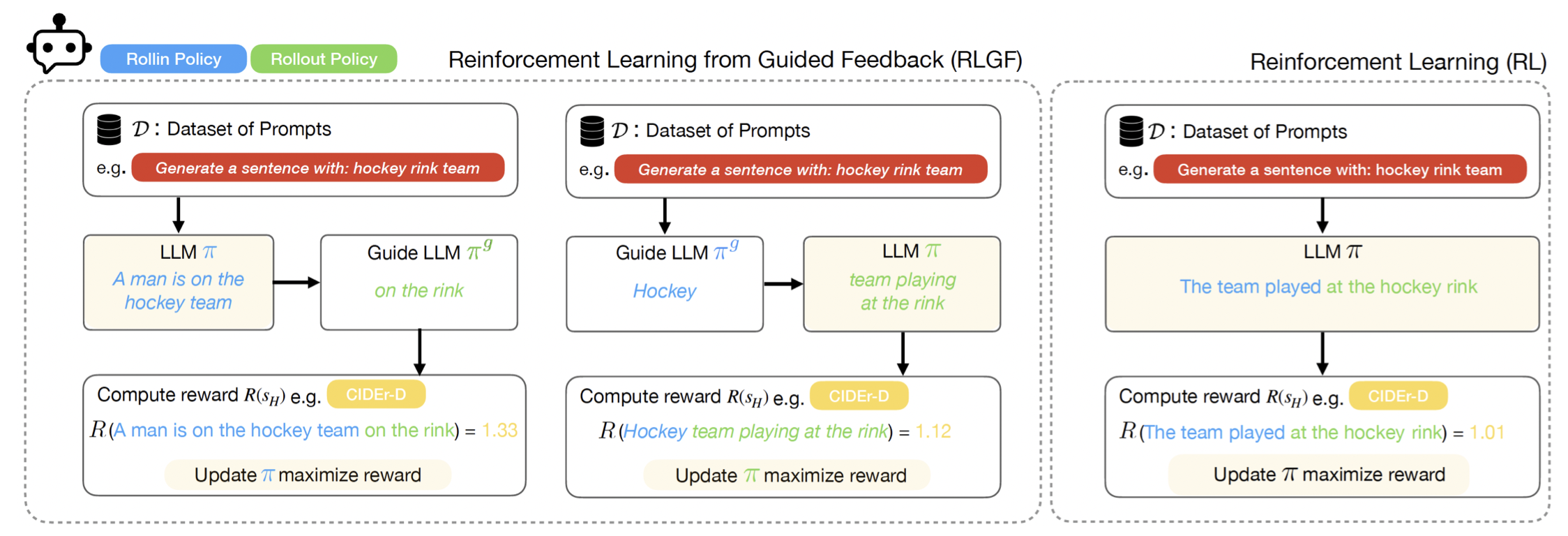

Jonathan D. Chang*, Kianté Brantley*, Rajkumar Ramamurthy, Dipendra Misra, Wen Sun Preprint, 2023 arXiv An RLHF algorithmic framework that combines two models, the LLM being trained and a suboptimal expert LLM, to improve RLHF performance.

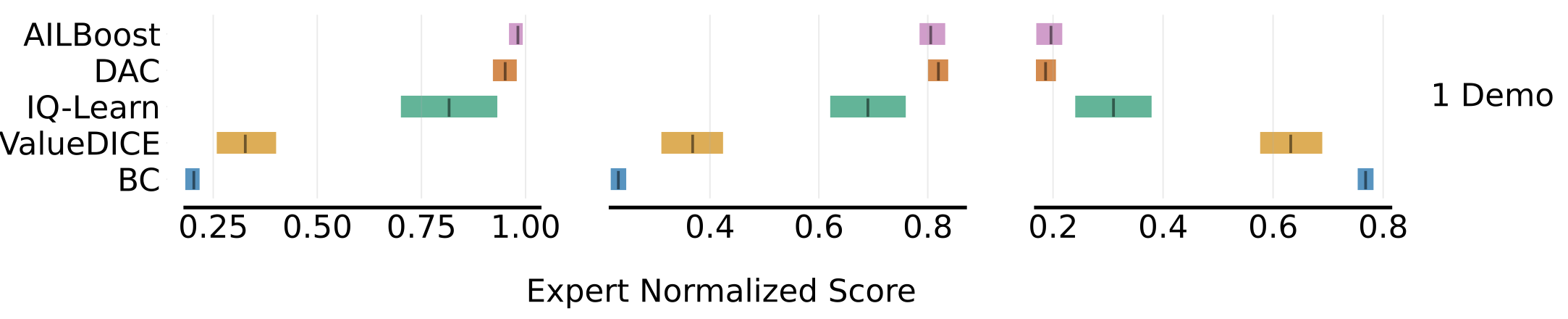

Jonathan D. Chang, Dhruv Sreenivas*, Yingbing Huang*, Kianté Brantley, Wen Sun ICLR 2024, 2024 OpenReview Principled off-policy imitation learning algorithms through functional gradient boosting. Improves upon state-of-the-art off-policy IL algorithms such as IQLearn.

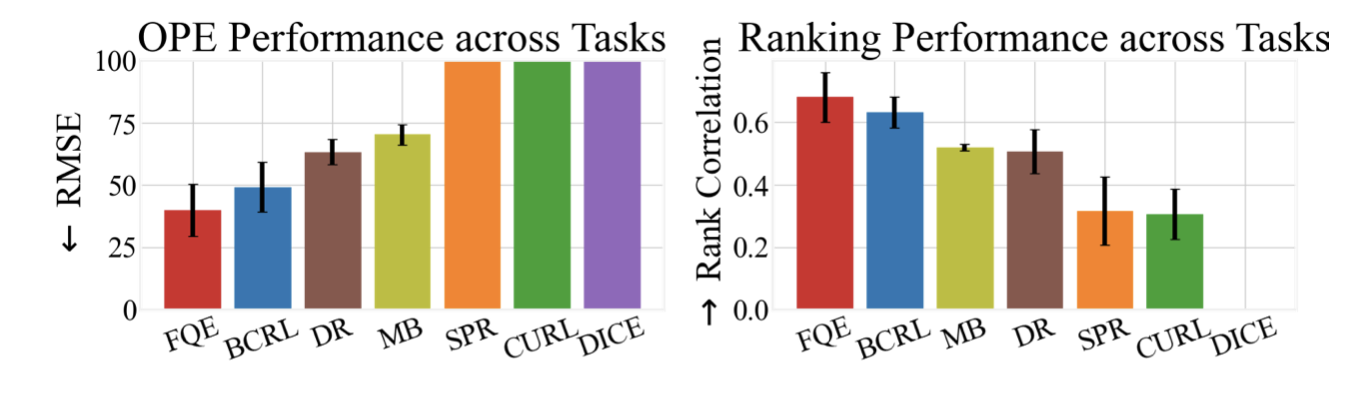

Jonathan D. Chang*, Kaiwen Wang*, Nathan Kallus, Wen Sun ICML 2022 (Long Talk), 2022 code / arXiv Representation learning for Offline Policy Evaluation (OPE) guided by Bellman completeness and coverage. BCRL achieves state-of-the-art OPE performance on image-based continuous control tasks from the DeepMind Control Suite.

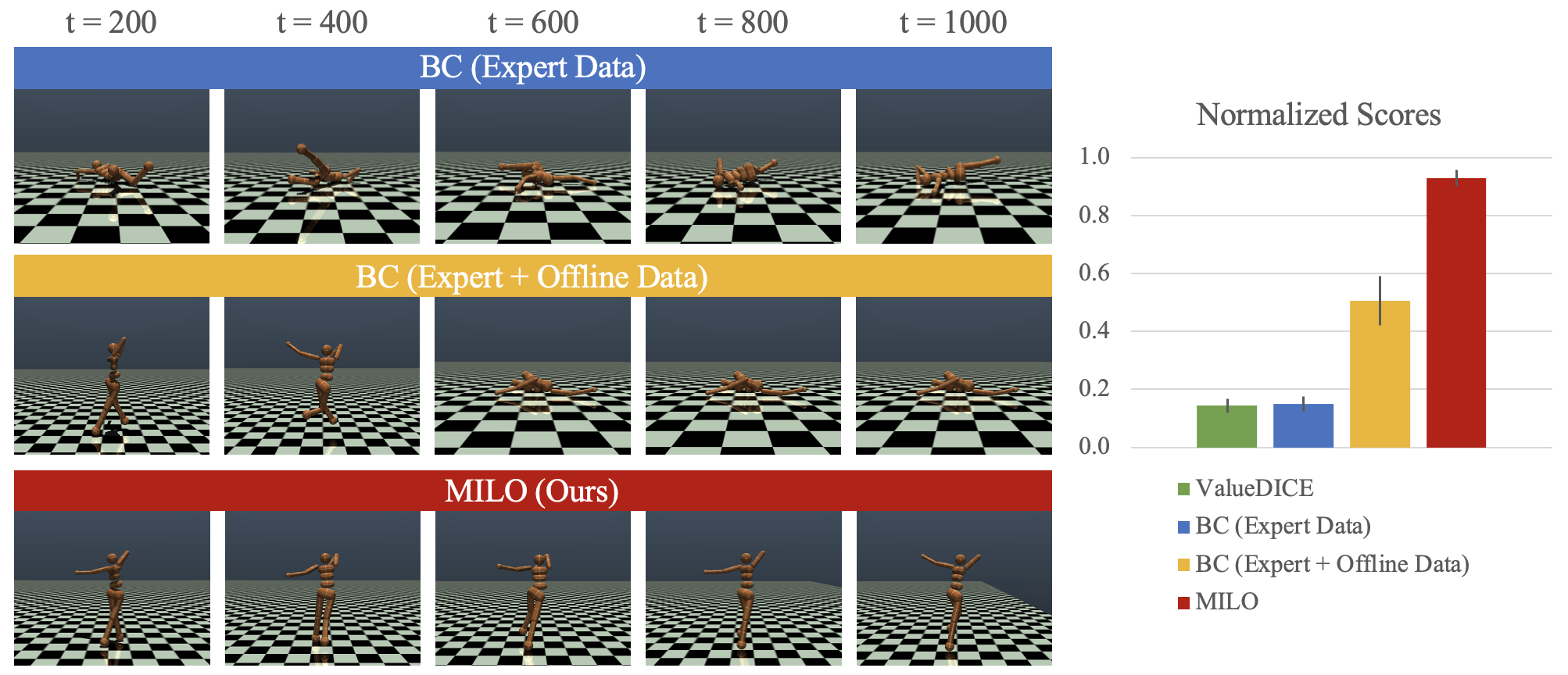

Jonathan D. Chang*, Masatoshi Uehara*, Dhruv Sreenivas, Rahul Kidambi, Wen Sun NeurIPS 2021, 2021 code / arXiv Leveraging offline data with only partial coverage, MILO mitigates covariate shift in imitation learning.

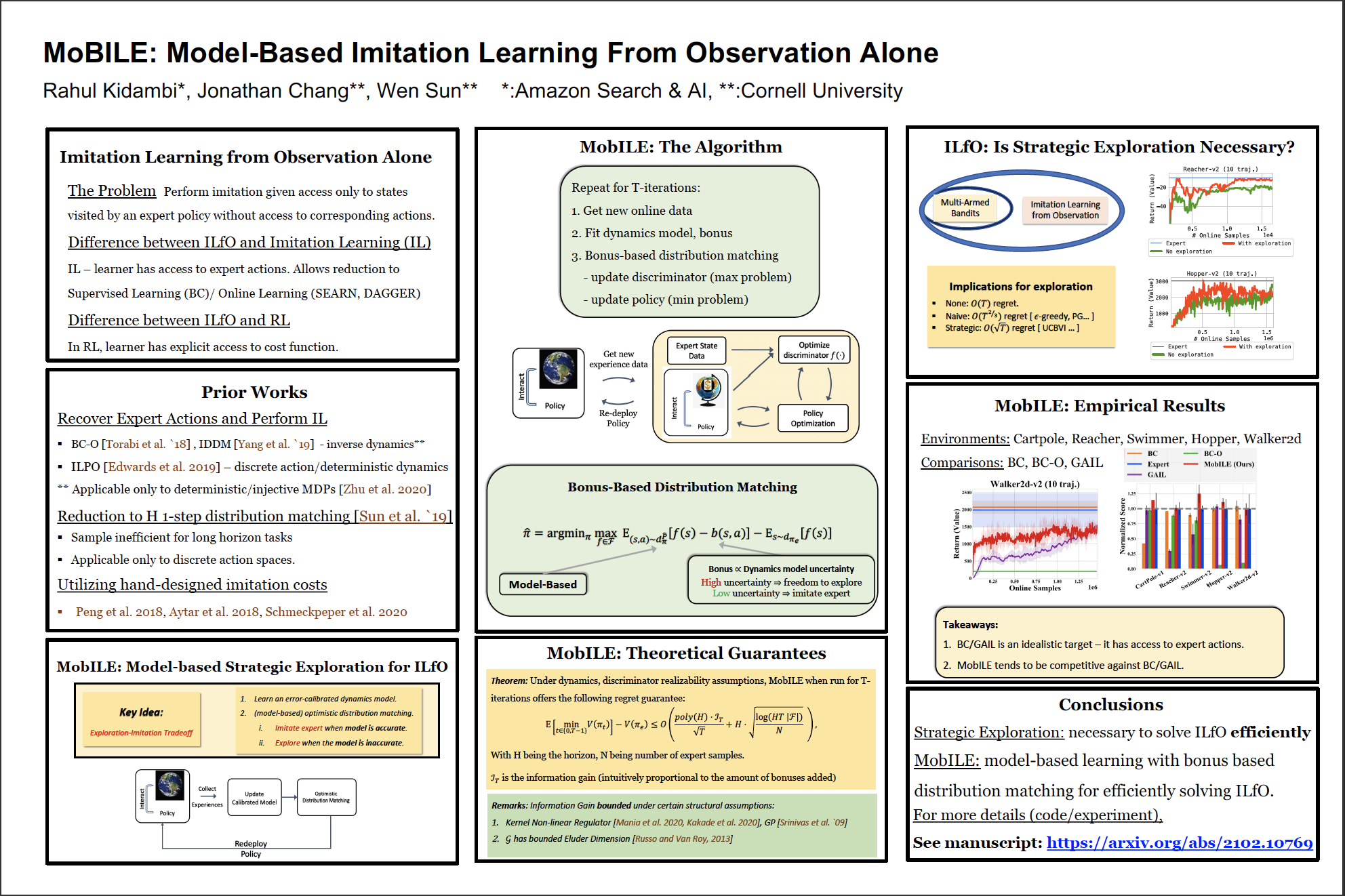

Rahul Kidambi, Jonathan D. Chang, Wen Sun NeurIPS 2021, 2021 arXiv We show that model-based imitation learning from observations (IfO) with strategic exploration can near-optimally solve IfO both in theory and in practice.

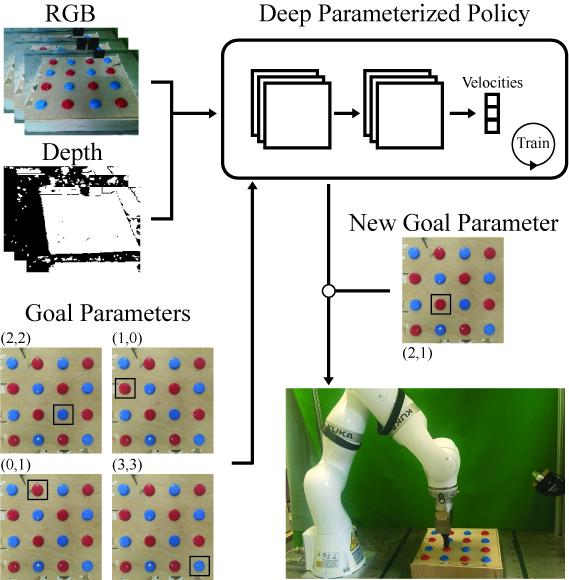

Jonathan D. Chang*, Nishanth Kumar, Sean Hastings, Aaron Gokaslan, Diego Romeres, Devesh Jha, Daniel Nikovski, George Konidaris, Stefanie Tellex arXiv We introduce an end-to-end method for learning targetable visuomotor skills as a goal-parameterized neural network policy, which successfully learns a mapping from target pixel coordinates to a robot policy.

Reviewer: NeurIPS 2021, ICML 2022, ICLR 2022, NeurIPS 2022, NeurIPS 2023, ICML 2023, ICLR 2024, NeurIPS 2024, ICML 2024

Website template from Jon Barron's website.